Introduction

Site pagination is a crucial element in web design, appearing in contexts ranging from category pages to article archives, gallery slideshows, and forum threads. For SEO professionals, handling pagination is an inevitable challenge. As websites grow, content must be split across multiple pages to enhance user experience (UX). The goal is to help search engines crawl and understand the relationships between these URLs, ensuring they index the most relevant pages.

Over the years, SEO best practices for pagination have evolved, and several myths have emerged as supposed facts. This guide will debunk these myths, present the optimal ways to manage pagination, review outdated methods, and investigate how to track the KPI impact of pagination.

How Pagination Can Hurt SEO

1. Duplicate Content:

Correct when pagination is improperly implemented: Duplicate content issues arise when both a “View All” page and paginated pages exist without a correct rel=canonical, or when a page=1 URL is created in addition to the root page.

Incorrect when pagination is handled properly: Even if the H1 and meta tags are the same, the actual page content differs, so the pages are not duplicates. Search engines expect and understand this.

2. Thin Content:

Correct when content is split to inflate pageviews: Dividing an article or gallery across multiple pages to increase pageviews and ad revenue leaves too little content on each page.

Incorrect when user experience comes first: Placing a UX-friendly amount of content on each page prevents this issue. Let users consume your content easily rather than chasing artificially inflated pageviews.

3. Diluted Ranking Signals:

Correct: Pagination can dilute internal link equity and other ranking signals, such as backlinks and social shares, across multiple pages.

Solution: Use pagination only when necessary to avoid poor user experiences, such as on e-commerce category pages. Add as many items as possible without significantly slowing down the page to minimize the number of paginated pages.

4. Crawl Budget:

Correct: Allowing Google to crawl paginated pages can consume valuable crawl budget.

Solution: Manage pagination parameters in Google Search Console to control how crawl budget is used. This ensures that more important pages receive the necessary attention.

Managing Pagination According to SEO Best Practices

1. Use Crawlable Anchor Links:

Ensure that anchor links with href attributes are used for internal linking to paginated pages. Avoid loading paginated anchor links or href attributes via JavaScript.

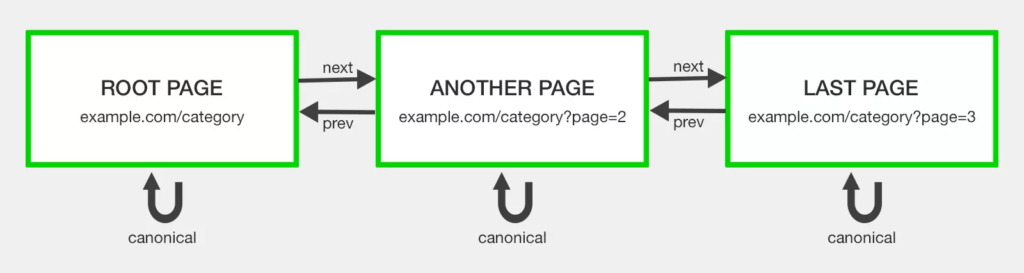

Use rel="next" and rel="prev" attributes to indicate the relationship between component URLs in a paginated series, even though Google no longer uses these link attributes as indexing signals. They still provide value for accessibility and other search engines like Bing.

Complement these with a self-referencing rel="canonical" link for each page. For example, /category?page=4 should have a rel="canonical" to /category?page=4. This indicates that each paginated page is the master copy of its content, preventing duplicate content issues.
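As a minimal sketch of the markup described above, the following Python helper builds the link tags for one page of a series. The function name pagination_link_tags, the page query parameter, and the example.com URLs are assumptions made for illustration, not part of any particular CMS or framework.

```python
from html import escape
from typing import List
from urllib.parse import urlencode


def pagination_link_tags(base_url: str, page: int, last_page: int) -> List[str]:
    """Return canonical, prev, and next <link> tags for one paginated page."""
    if not 1 <= page <= last_page:
        raise ValueError("page is outside the series")

    def page_url(n: int) -> str:
        # Page 1 maps to the root URL so that no separate ?page=1 URL exists.
        return base_url if n == 1 else f"{base_url}?{urlencode({'page': n})}"

    # Every page canonicalizes to itself, not to the first page.
    tags = [f'<link rel="canonical" href="{escape(page_url(page))}">']
    if page > 1:
        tags.append(f'<link rel="prev" href="{escape(page_url(page - 1))}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{escape(page_url(page + 1))}">')
    return tags


if __name__ == "__main__":
    for tag in pagination_link_tags("https://www.example.com/category", 4, 10):
        print(tag)
```

Mapping page 1 to the root URL is a design choice that avoids the duplicate page=1 URL mentioned earlier in this guide.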

2. Modify Paginated Pages On-Page Elements:

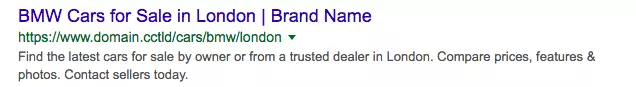

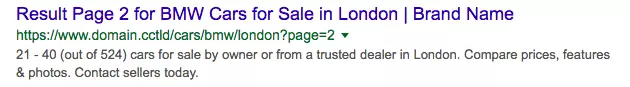

Google treats paginated pages as normal pages, meaning they are eligible to compete against the root page for ranking. To prevent “Duplicate meta descriptions” or “Duplicate title tags” warnings in Google Search Console, make slight modifications to your code.

If the root page uses the formula:

- Root Page Title: Category - Example Site

- Root Page Meta Description: Explore our extensive category on Example Site.

The successive paginated pages could use:

- Paginated Page Title: Category - Page 2 - Example Site

- Paginated Page Meta Description: Continue exploring our extensive category on Page 2 of Example Site.

These adjustments help discourage Google from displaying paginated pages in the SERPs over the root page.
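The title and description formula above could be generated in a template layer along these lines. This is only a sketch: paginated_meta is a hypothetical helper name, and the wording of the strings simply mirrors the Example Site formula rather than any required format.

```python
from typing import Dict


def paginated_meta(category: str, site: str, page: int) -> Dict[str, str]:
    """Build the title and meta description for one page of a series."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    topic = category.lower()
    if page == 1:
        # Root page: the plain, fully optimized formula.
        return {
            "title": f"{category} - {site}",
            "description": f"Explore our extensive {topic} on {site}.",
        }
    # Paginated pages: include the page number so each title is unique.
    return {
        "title": f"{category} - Page {page} - {site}",
        "description": (
            f"Continue exploring our extensive {topic} on Page {page} of {site}."
        ),
    }


if __name__ == "__main__":
    print(paginated_meta("Category", "Example Site", 2))
```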

Additionally, consider traditional on-page SEO tactics for further optimization:

- De-optimize paginated page H1 tags.

- Add useful on-page text to the root page, but not to the paginated pages.

- Add a category image with an optimized file name and alt tag to the root page, but not to the paginated pages.

3. Don’t Include Paginated Pages in XML Sitemaps:

While paginated URLs are technically indexable, they are not a priority for SEO. Excluding them from your XML sitemap helps save crawl budget for more important pages.
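One way to apply this when generating a sitemap is to filter out any URL that carries the pagination parameter. The sketch below assumes the parameter is named page and uses made-up example.com URLs; sitemap_urls and is_paginated are illustrative names.

```python
from typing import Iterable, List
from urllib.parse import parse_qs, urlsplit


def is_paginated(url: str, param: str = "page") -> bool:
    """Return True if the URL's query string contains the pagination parameter."""
    return param in parse_qs(urlsplit(url).query)


def sitemap_urls(urls: Iterable[str], param: str = "page") -> List[str]:
    """Keep only the URLs that are not part of a paginated series."""
    return [url for url in urls if not is_paginated(url, param)]


if __name__ == "__main__":
    candidates = [
        "https://www.example.com/category",
        "https://www.example.com/category?page=2",
        "https://www.example.com/category?sort=price&page=3",
        "https://www.example.com/product/blue-widget",
    ]
    for url in sitemap_urls(candidates):
        print(url)
```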

4. Handle Pagination Parameters in Google Search Console:

Use parameters like ?page=2 instead of static URLs such as /category/page-2. Neither format carries a ranking advantage, but parameter-based URLs may increase the likelihood of swift discovery by Googlebot.

To avoid crawling traps, ensure that a 404 HTTP status code is sent for any paginated pages not part of the current series. Then set the parameter to “Paginates” in Google Search Console and adjust the crawl settings as needed; this step requires no developer assistance.
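The 404 rule can be sketched as a small check that a request handler might call before rendering. The function name pagination_status and its arguments are assumptions for illustration; a real application would take the item count from its own data layer.

```python
import math
from typing import Optional


def pagination_status(page_param: Optional[str], total_items: int, per_page: int) -> int:
    """Return the HTTP status code to send for a requested page value."""
    last_page = max(1, math.ceil(total_items / per_page))
    if page_param is None:
        return 200  # no parameter: this is the root page
    if not (page_param.isascii() and page_param.isdigit()):
        return 404  # non-numeric values are never part of the series
    page = int(page_param)
    # Anything below 1 or beyond the last page is outside the current series.
    return 200 if 1 <= page <= last_page else 404


if __name__ == "__main__":
    # 95 items at 10 per page gives a series of 10 pages.
    for value in (None, "10", "11", "abc"):
        print(value, pagination_status(value, total_items=95, per_page=10))
```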

5. Avoid Misunderstood Solutions:

- Do Nothing: Google believes Googlebot is smart enough to find the next page through links, so it doesn’t need any explicit signal. The message to SEOs is essentially to handle pagination by doing nothing. While there is a core of truth to this statement, by doing nothing you’re gambling with your SEO. Many sites have seen Google select a paginated page to rank over the root page for a search query. There’s always value in giving crawlers clear guidance on how you want them to index and display your content.

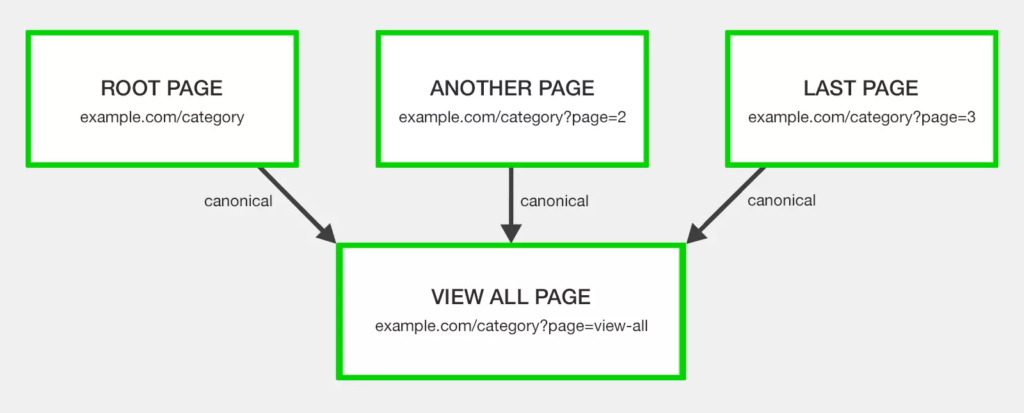

- Canonicalize to a View All Page: Only use this if it offers a good user experience. Otherwise, stick to proper pagination.

- Canonicalize to the First Page: Each paginated page should self-reference unless a View All page is used. Incorrect canonicalization can misdirect search engines and lead to content dropping out of the index.

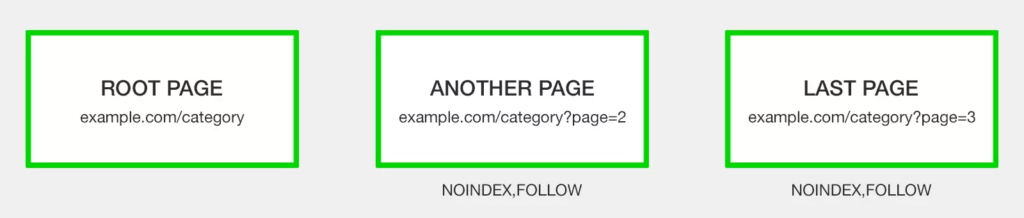

- Noindex Paginated Pages: This method prevents paginated content from being indexed, but long-term use can cause Google to nofollow the links on those pages, removing linked content from the index.

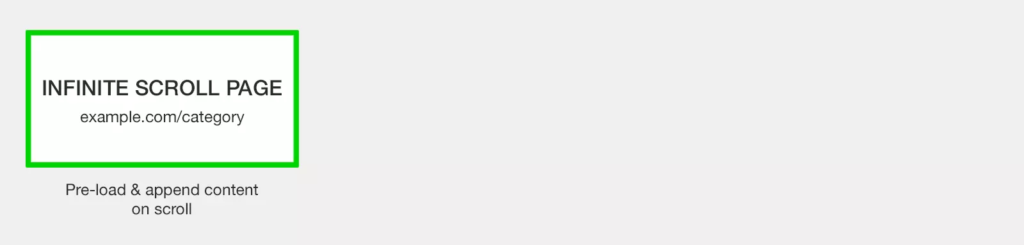

6. Use Infinite Scrolling or Load More Carefully:

Infinite scroll and load more buttons improve user experience but are not naturally SEO-friendly. Convert these functionalities to an equivalent paginated series accessible even with JavaScript disabled.

As users scroll or click, update the URL in the address bar to the corresponding paginated page, using pushState for any action that resembles a page turn. This approach maintains SEO best practices while enhancing user experience.

7. Discourage or Block Pagination Crawling:

If crawl budget conservation is a priority, use methods like robots.txt disallow or Google Search Console settings to block crawling of paginated URLs. This approach should be paired with well-optimized XML sitemaps to ensure essential content is indexed.

There are three ways to block crawlers:

- Add nofollow to all links pointing to paginated pages (messy approach).

- Use a robots.txt disallow (cleaner approach).

- Set paginated page parameter to “Paginates” and configure Google to crawl “No URLs” in Google Search Console (no developer needed).
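For the robots.txt option, it can help to check which URLs a disallow pattern would catch before deploying it. The sketch below is a simplified matcher written for illustration: it handles only the * wildcard and the $ end anchor that Google documents for robots.txt paths, and it ignores Allow rules and rule precedence. The two patterns shown are assumptions that fit a page query parameter.

```python
import re
from typing import Iterable

# Hypothetical rules for a site whose pagination parameter is "page".
DISALLOW_PATTERNS = ["/*?page=", "/*&page="]


def robots_pattern_to_regex(pattern: str) -> "re.Pattern":
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal text and let each * match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query: str, patterns: Iterable[str]) -> bool:
    """Return True if any pattern matches from the start of the path."""
    return any(robots_pattern_to_regex(p).match(path_and_query) for p in patterns)


if __name__ == "__main__":
    for path in ("/category", "/category?page=2", "/category?sort=asc&page=3"):
        print(path, is_disallowed(path, DISALLOW_PATTERNS))
```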

Tracking the KPI Impact of Pagination

1. Benchmark Data Sources:

- Server Log Files: Track the number of paginated page crawls.

- Site Search Operator: Use site:example.com inurl:page to check how many paginated pages are indexed.

- Google Search Console Search Analytics Report: Filter by paginated pages to understand impressions.

- Google Analytics Landing Page Report: Filter by paginated URLs to assess on-site behavior.

If you see an issue getting search engines to crawl your site pagination to reach your content, you may want to change the pagination links.
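For the server log benchmark, a short script can count how often a crawler requested each paginated URL. This sketch assumes access logs in the common combined format and a page query parameter; count_paginated_crawls is an illustrative name. It matches on the user-agent string only, which can be spoofed, so verifying that requests really come from Googlebot is left out.

```python
import re
from collections import Counter
from typing import Iterable

# Request line inside a combined-format log entry, e.g. "GET /path HTTP/1.1".
REQUEST = re.compile(r'"(?:GET|HEAD) (\S+) HTTP/[\d.]+"')
# Pagination parameter anywhere in the query string.
PAGE_PARAM = re.compile(r"[?&]page=(\d+)")


def count_paginated_crawls(log_lines: Iterable[str], bot_token: str = "Googlebot") -> Counter:
    """Count crawler requests per page number across the given log lines."""
    counts: Counter = Counter()
    for line in log_lines:
        if bot_token not in line:
            continue  # not the crawler being benchmarked
        request = REQUEST.search(line)
        if request is None:
            continue  # malformed or unsupported request line
        page = PAGE_PARAM.search(request.group(1))
        if page:
            counts[int(page.group(1))] += 1
    return counts


if __name__ == "__main__":
    with open("access.log", encoding="utf-8") as handle:  # path is an assumption
        for page, hits in sorted(count_paginated_crawls(handle).items()):
            print(f"page {page}: {hits} crawls")
```

Running the same count before and after a pagination change gives a like-for-like crawl comparison.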

2. Post-Optimization:

After implementing best practice pagination handling, revisit these data sources to measure the success of your efforts. Track improvements in crawl efficiency, indexing, and user engagement metrics to evaluate the impact of your optimizations.

By following these detailed best practices, you can manage pagination effectively, ensuring a better user experience and optimal SEO performance. This approach helps search engines understand and index your content accurately, ultimately enhancing your website’s visibility and ranking.